What is Load Balancing?

In this article, I will discuss the possible benefits of load balancing your web hosting setup. However, before considering whether a load balancer is needed, we should ensure we’re all on the same page when it comes to understanding the function of a load balancer. In its most basic form, a load balancer is a server that distributes network traffic to a series of backend servers.

It does this using one of several predetermined patterns, known as load balancing algorithms. The two most common are round-robin and least connections:

- Round-Robin – Requests are distributed between the backend servers sequentially (one after the other).

- Least Connections – New requests are sent to the backend server with the fewest current connections.
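To make the two algorithms concrete, here is a minimal Python sketch of each. It is only an illustration of the selection logic, not how any particular load balancer implements it, and the class names and `app-1`-style server names are made up for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed, repeating order."""

    def __init__(self, backends):
        self._backends = cycle(backends)

    def pick(self):
        return next(self._backends)


class LeastConnectionsBalancer:
    """Picks the backend with the fewest open connections."""

    def __init__(self, backends):
        # Track the number of open connections per backend.
        self.connections = {backend: 0 for backend in backends}

    def pick(self):
        # On a tie, min() returns the first backend in insertion order.
        backend = min(self.connections, key=self.connections.get)
        self.connections[backend] += 1
        return backend

    def release(self, backend):
        # Call this when a request finishes and its connection closes.
        self.connections[backend] -= 1


# Hypothetical server names, for illustration only.
rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
rr_picks = [rr.pick() for _ in range(4)]

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.pick()
second = lc.pick()
lc.release(second)   # the second request finishes early
third = lc.pick()    # goes back to the now-idle backend
```

Round-robin ignores how busy each server is, which is fine when requests cost roughly the same. Least connections adapts when some requests take much longer than others.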

By distributing network traffic to a pool of backend servers, you can dramatically improve concurrency. In the case of WordPress, this is the number of users your site can handle at the same time.

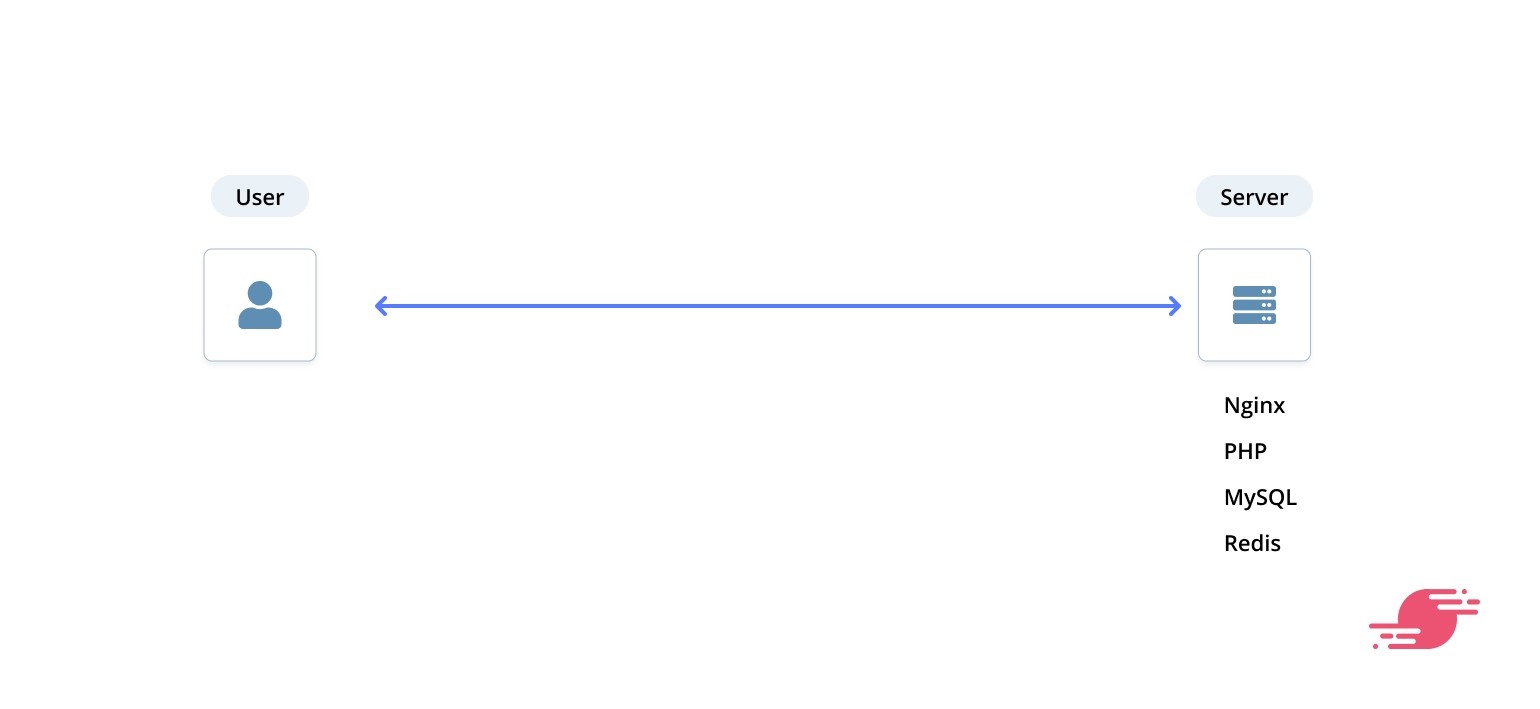

Let’s look at the traditional WordPress hosting setup we’re used to:

- a single server with a fixed IP address

- web server software (usually Nginx or Apache) installed to serve a website from the server file system

- PHP and MySQL/MariaDB installed, as per the WordPress requirements

- Redis installed for object caching

DNS is managed either by the server provider or through a third-party service like Cloudflare, which points the website’s domain name to the server IP address. To help keep the site traffic secure, an SSL certificate is installed on the server.

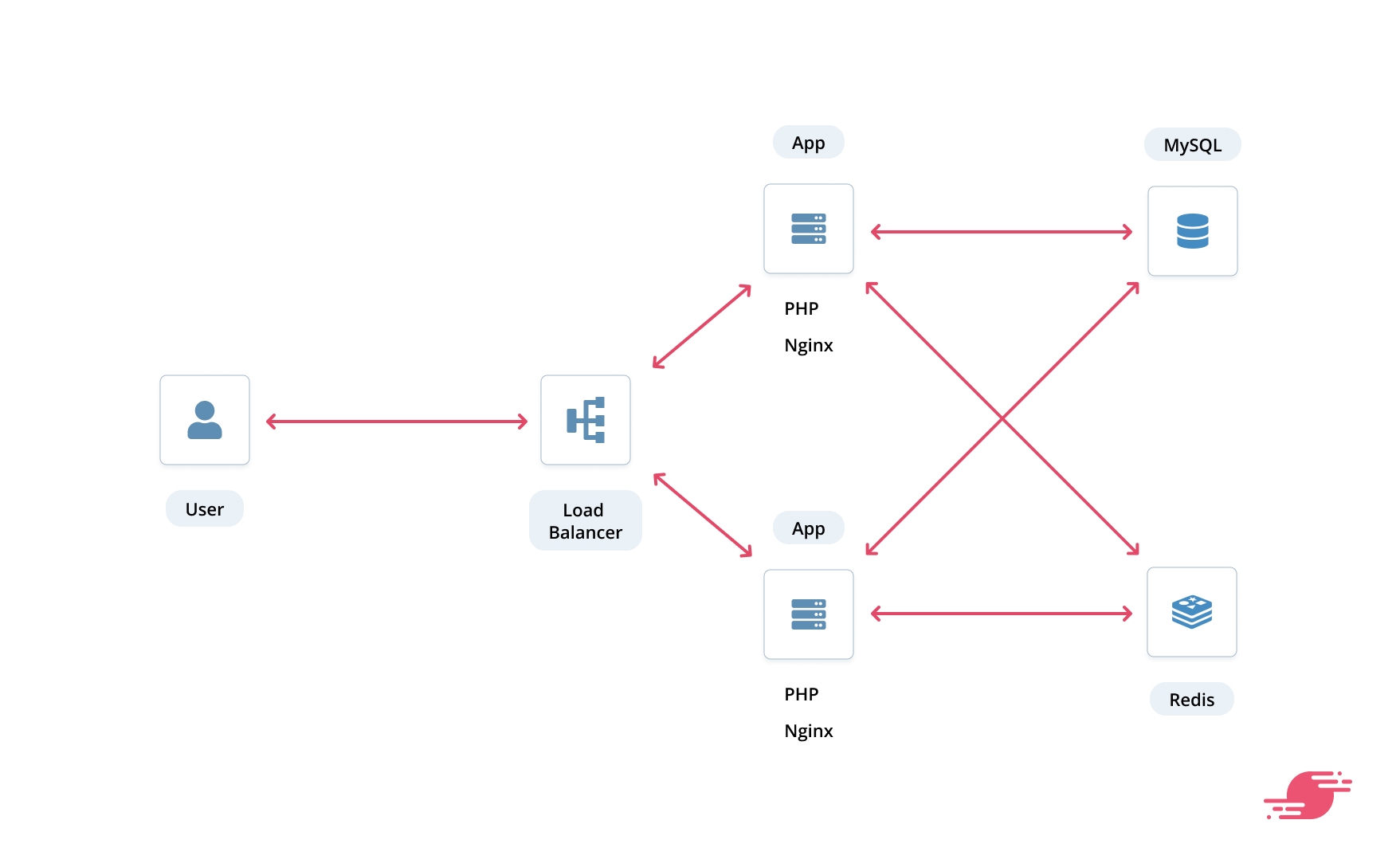

In a load-balanced setup, we extract the various server components to their own dedicated servers, resulting in a setup similar to this:

This setup implements a load balancer in front of one (or more) application servers that each have copies of the application codebase installed. The DNS is updated to point the domain name to the load balancer via its public hostname or IP address.

The SSL certificate is installed on the load balancer. It handles the routing of requests to one of the available application servers, which can read and write from the servers dedicated to managing the MySQL database and the Redis object cache.

This load-balanced setup introduces a couple of benefits:

- Each server has fewer responsibilities, meaning it usually has fewer software packages installed. This often makes each server more efficient because its CPU cycles are reserved for a single task. In a single server setup, Nginx, PHP, and MySQL all compete for CPU time to handle a request.

- Each part of the system can be scaled independently of the others. If your database server becomes a bottleneck, you can increase the size of the database server or add additional replicas. Likewise, if your site sees an increase in concurrent users and your application servers can’t keep up, you can add more application servers.

However, a load-balanced setup does have its drawbacks:

- It’s costly. The act of extracting each server component to its own server means that you will be paying for more servers.

- It’s more complicated. Handling a single web request involves many moving parts, and those parts increase as you add more servers.

You can alleviate the complexity problem somewhat by opting for managed services. Both DigitalOcean and AWS offer managed database servers and load balancers. While these services decrease complexity, they incur additional costs, which takes us back to the first drawback.

Now that we understand the basics of load balancing and its pros and cons, let’s look at a few common load balancer misconceptions and perform some benchmarks!

Load Balancing Misconceptions

Instant Performance Win

Throwing a load balancer into your hosting setup won’t instantly boost application performance or throughput. That’s because when you split your application services across multiple servers, you introduce network latency. Even when your servers are located in the same data center, a series of network connections must be made between each server to handle every request. Typically, your application servers will need to communicate with your database and object cache servers.
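A rough way to picture the added latency is a back-of-the-envelope model like the Python sketch below. Every number in it is hypothetical and chosen purely for illustration, and the function name is my own. It also assumes a local database connection costs no network time, which is a simplification.

```python
def request_time_ms(php_ms, db_queries, db_query_ms, network_round_trip_ms):
    """Estimate the time to build one uncached page.

    Each database query costs its execution time plus one network
    round trip to the database server (0 when the database is local).
    """
    return php_ms + db_queries * (db_query_ms + network_round_trip_ms)


# Hypothetical figures, not measurements.
single_server = request_time_ms(php_ms=80, db_queries=30, db_query_ms=1.0,
                                network_round_trip_ms=0.0)
split_servers = request_time_ms(php_ms=80, db_queries=30, db_query_ms=1.0,
                                network_round_trip_ms=0.5)

print(f"single server: {single_server} ms, split servers: {split_servers} ms")
```

Even a sub-millisecond round trip adds up when a page runs dozens of queries, which is why an unloaded single server can respond faster than a split setup.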

To see a performance gain from load balancing, your existing single server setup needs to be under strain. If your current server is happily sitting at 20% utilization, load balancing will probably be marginally slower per request. However, once you reach a level of concurrent users that your single server is unable to handle, its throughput will start to decrease and response times will increase. That is the point where a load-balanced setup starts to pay off.

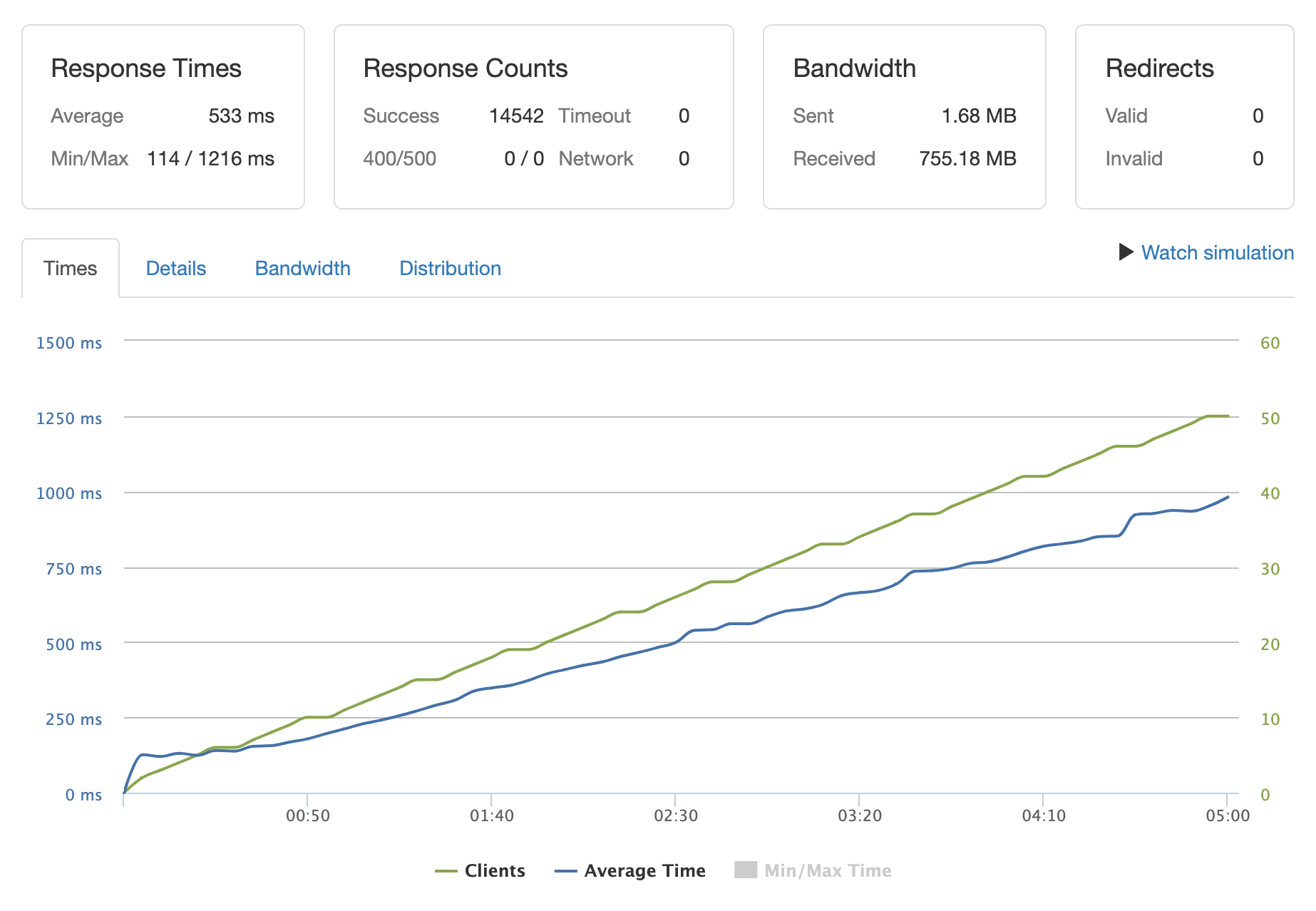

We can test this for ourselves using Loader, which is an application load testing tool. Here, I’ve provisioned a 4 GB RAM, 2 vCPUs server on AWS Lightsail and connected it to SpinupWP (see our doc How to Install WordPress on AWS Lightsail for step-by-step instructions). I’ve deployed a single site to the server with HTTPS enabled, page caching disabled, and Redis object caching enabled. I’ve then tested the site over 5 minutes, with a concurrency level that grows from 1 to 50 users:

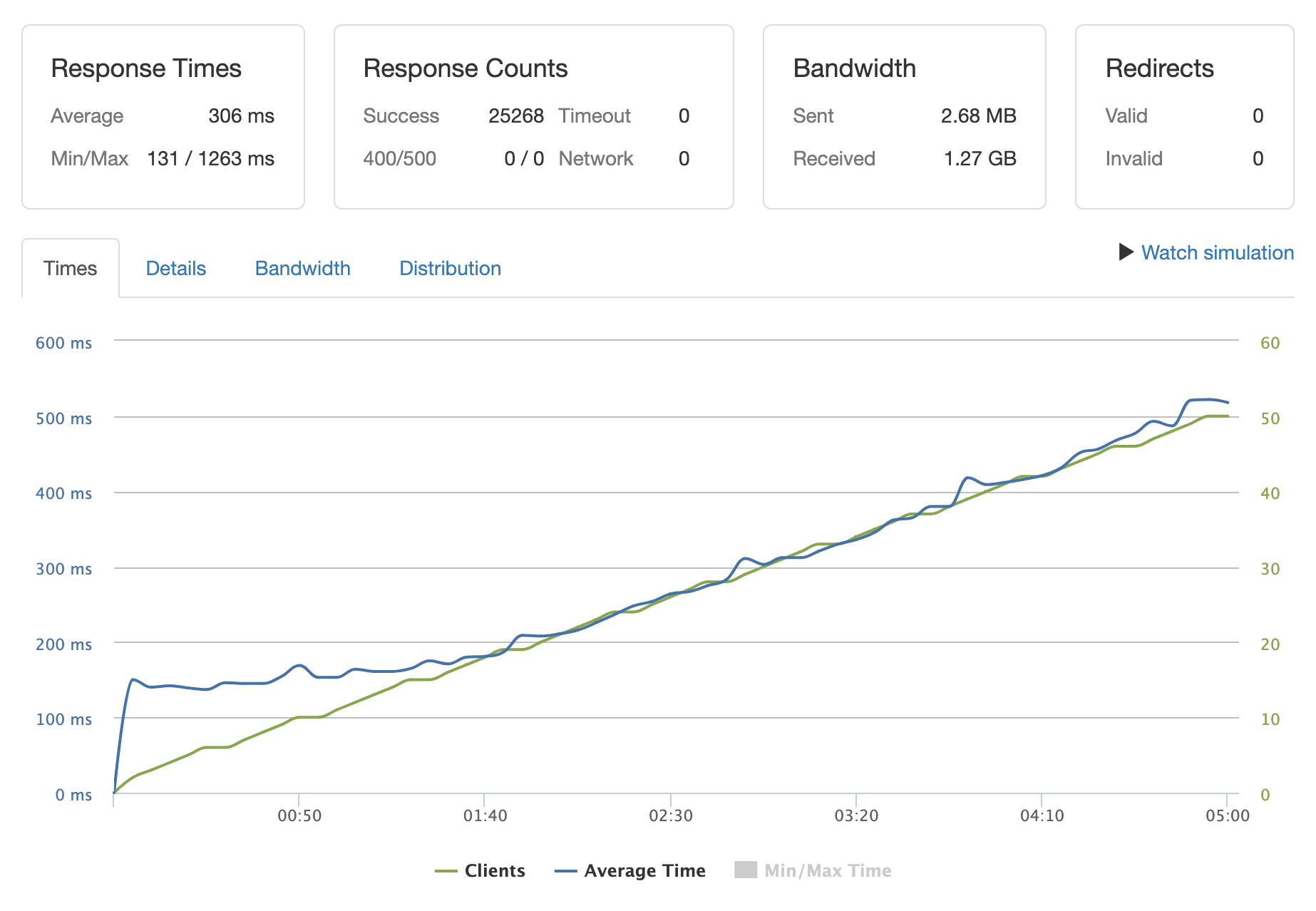

For the load-balanced configuration, I’ve deployed the following setup using Amazon Lightsail:

- Amazon Lightsail load balancer

- Application servers – 2 x 4 GB RAM, 2 vCPUs servers

- Object cache – 1 GB RAM, 1 vCPU server

- Database – 4 GB RAM, 2 vCPUs managed database

The application servers are provisioned using SpinupWP but have had both MySQL and Redis removed. The object cache server has nothing but Redis installed. Once again, I’ve deployed a single site to the application servers with HTTPS enabled, page caching disabled, and Redis object caching enabled. HTTPS termination is handled at the load balancer, not at each application server. I’ve then tested the site over 5 minutes, with a maintained client load from 1 – 50 (as above):

The average response time is much lower on the load-balanced configuration (306 ms compared to 533 ms). However, the single server outperforms the load-balanced configuration until it hits around 8 concurrent users. The load-balanced setup is able to handle more than 8 concurrent users with much better response times than the single server.
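To put the two averages in perspective, the short Python calculation below works out the difference using only the figures quoted above.

```python
single_server_ms = 533   # average response time, single server
load_balanced_ms = 306   # average response time, load-balanced setup

saved_ms = single_server_ms - load_balanced_ms
reduction_pct = saved_ms / single_server_ms * 100

print(f"{saved_ms} ms saved, {reduction_pct:.1f}% lower on average")
```

Keep in mind that this is an average over the whole test. Below roughly 8 concurrent users the single server was still the faster of the two.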

Auto Scaling

Auto-scaling is the ability for your server infrastructure to scale up or down based on concurrent requests. This can help limit some of the costs of running a load-balanced configuration by only having enough servers standing by to handle the current workload.

One common example of this is auto-scaling your application servers. You can configure your load balancing setup to create new application servers when the number of requests is above a certain threshold (more than x requests per application server). Similarly, when the number of requests drops, application servers that are no longer needed can be stopped and deleted. Without auto-scaling, your infrastructure would need to always run with the capability of handling peak traffic.
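The threshold rule described above can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm any particular provider uses. The function name, the per-server limit, and the minimum and maximum server counts are all assumptions made for the example.

```python
import math


def desired_app_servers(current_requests, max_requests_per_server,
                        min_servers=1, max_servers=10):
    """Return how many application servers should be running.

    Rounds up so that no server exceeds its request threshold, then
    clamps the result between min_servers and max_servers.
    """
    needed = math.ceil(current_requests / max_requests_per_server)
    return max(min_servers, min(needed, max_servers))


# Hypothetical threshold of 50 concurrent requests per server.
quiet = desired_app_servers(current_requests=10, max_requests_per_server=50)
busy = desired_app_servers(current_requests=120, max_requests_per_server=50)
spike = desired_app_servers(current_requests=1000, max_requests_per_server=50)
```

The upper limit is there to cap costs during a traffic spike, and the lower limit keeps at least one server running when traffic drops to zero.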

Load balancing isn’t auto-scaling. However, load balancing is required in an auto-scaling infrastructure. Having a load balancer installed doesn’t mean your stack will grow automatically as the number of concurrent users increases.

High Availability

High availability is another hosting buzzword. It usually means designing your infrastructure to stay online as close to 100% of the time as possible, even when individual components fail. This is a common requirement for business-critical sites, such as busy e-commerce stores. As with auto-scaling above, load balancing alone doesn’t grant you high availability.

Like in a single server setup, there are still multiple points of failure in a load-balanced configuration. For example, if a single application server were to fall over, your site would likely continue to work (because other app servers are available to take up the slack). However, the load balancer itself, database server, and object cache server remain possible points of failure. You can somewhat alleviate this issue by opting for the managed services mentioned above. Both Amazon Lightsail and DigitalOcean offer managed database servers with high availability options, but you will pay a premium for the added redundancy.
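A quick calculation shows why the remaining single points of failure matter. The Python sketch below assumes every server is independently up 99.9% of the time. That figure is hypothetical, and the function names are my own. Components that must all be up multiply their availabilities, while redundant copies only fail when every copy fails at once.

```python
def serial_availability(*components):
    """All components must be up: multiply their availabilities."""
    total = 1.0
    for availability in components:
        total *= availability
    return total


def redundant_availability(availability, copies):
    """At least one of several identical copies must be up."""
    return 1 - (1 - availability) ** copies


# Hypothetical: each server is up 99.9% of the time, failures independent.
server = 0.999
app_tier = redundant_availability(server, copies=2)

# Load balancer, app tier, database, and object cache must all be up.
stack = serial_availability(server, app_tier, server, server)

print(f"app tier: {app_tier:.4%}, whole stack: {stack:.4%}")
```

Under these assumptions, the two application servers together are very reliable, but the stack as a whole comes out at roughly 99.7%, which is lower than a single 99.9% server. The redundancy only pays off once the load balancer, database, and object cache are made redundant too.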

Do We Use Load Balancing?

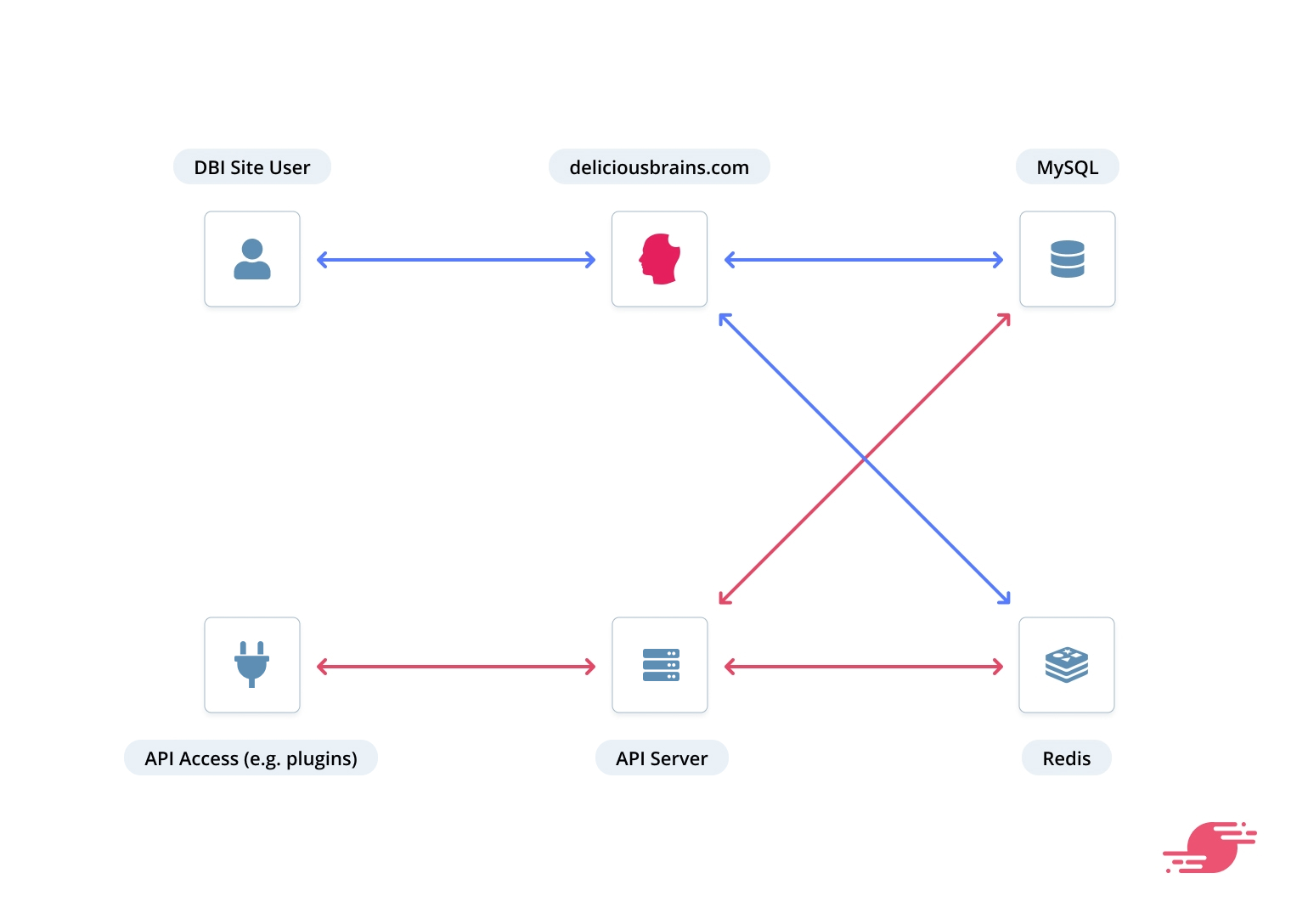

Our site that gets the most traffic is deliciousbrains.com. We don’t implement a load balancer on this site, but we do split the API traffic from the main website traffic by having two domains: deliciousbrains.com and api.deliciousbrains.com. Each of these domains points to a separate application server running the same WordPress code. We have one managed MySQL database server and one managed Redis server, which handle the database and object cache for both application servers.

You can configure an external MySQL database via the SpinupWP dashboard but not an external Redis server. Currently that needs to be done manually, but we’re considering adding it to SpinupWP as well. Because we’re able to manage our application servers via the SpinupWP dashboard, we know there are good caching policies in place. Finally, we offload our media and asset files using WP Offload Media and serve them via a CDN.

We’ve chosen this configuration because it allows us to scale our website and API independently, and also provides for future flexibility. If either our website or API started seeing a large increase in traffic, it would not be complicated to implement a load balancer and more application servers for either service.

Should You Implement Load Balancing?

Load balancing certainly has its place when it comes to extremely high-traffic WordPress sites. However, load balancing shouldn’t be the first solution you reach for when ensuring your WordPress site can handle significant traffic.

First, you should ensure your site is being cached correctly by following our WordPress Caching: All You Need To Know guide. Implementing page caching and then object caching can often resolve issues that occur during peak traffic times.

If caching isn’t an option or you’re still hitting performance issues, consider the benefits of vertically scaling your server hardware. Sometimes, simply adding an extra CPU core or a few GBs of memory can have a profound impact on your site’s ability to handle load.

In many cases, the need to increase CPU cores or memory is due to one component of your website, not all of them. In this instance, consider horizontally scaling your infrastructure. For example, if your PHP processing is fast but your database queries are slow, move your database to a separate server. If you’re serving a lot of media (images/videos), move them to an offsite location and serve them via a CDN. The goal is to reduce the load on your web server by moving the components that need more resources to their own environment. This is the setup we’re using, and it works well.

Only once you’ve exhausted all those options would we suggest that you consider load balancing.

Have you implemented load balancing for your WordPress site? Did you try vertically or horizontally scaling your infrastructure first? Let us know what challenges you faced in the comments below.