Database indexing is an often forgotten but valuable practice when managing custom tables. Just like the index of a book, an index greatly improves the speed of retrieving data from your database tables. It should be the first thing you think about when you create a custom table.

In this tutorial, I’ll show you how to optimize databases with indexing, and highlight how not having indexes can affect your WordPress website’s performance and hence its search engine ranking and user experience.

Ensuring your custom tables are properly indexed shows results. In a recent test of 1 million records, querying about a hundred thousand records at a time, I saw a decrease in query time of around 150% when implementing indexes!

Why You Might Need to Add Indexes to Your WordPress Database

Many WordPress developers aren’t very familiar with database table indexing. After all, the default WordPress database tables are already indexed correctly, optimizing load times for complex searches such as auto drafts, trashed posts, unapproved comments, etc.

The official plugin developer handbook doesn’t mention custom tables and recommends using custom post types. However, we know that using custom post types has its limitations. In some cases, it’s more beneficial to create a custom table to store plugin data. The original WordPress Codex still has a guide on Creating Tables with Plugins. The irony is if you’ve ever created a table in MySQL using this method with a primary key column (normally called id), MySQL has actually created an index for that column (called PRIMARY by default).

There’s a valuable piece of information missing from this analysis that many WordPress developers don’t consider: adding table indexes to fields that you might run WHERE or JOIN queries on.

It’s important to evaluate the situation before choosing to add indexes. Tables with few records won’t see much benefit from indexes as MySQL can read the entire table into memory quickly and queries that do full table scans won’t take long.

On the other hand, if a table contains a lot of data and there isn’t a high volume of write queries (i.e., INSERT and UPDATE queries), indexes can give a huge boost to SELECT query performance, avoiding full table scanning. A WooCommerce site with tons of orders accumulated over many years is a good example.

If you have a table that gets a high volume of write queries, adding indexes will slow down those write queries as the indexes need to be rebuilt every time a row is added or updated. A table populated with usage data received from thousands of plugins is a good example of this.

You should consider table indexes when you are trying to optimize a site with slow load times. Generally, when sites are slow to respond, people reach for caching solutions or load balancers. These are powerful tools for reducing the number of queries for pages that can be cached, but some pages can’t be cached and it’s important to optimize performance for those pages as well.

To show you an example of what I’m talking about, I’ve created some custom tables via a plugin in my local WordPress site to manage records for an online book store, with the following structure.

CREATE TABLE `wp_hfm_categories` (

`id` mediumint NOT NULL,

`slug` tinytext NOT NULL,

`description` text NOT NULL,

`title` text NOT NULL

);

CREATE TABLE wp_hfm_books (

id mediumint(9) NOT NULL AUTO_INCREMENT,

category_id mediumint(9) NOT NULL,

author_id mediumint(9) NOT NULL,

title tinytext NOT NULL,

description text NOT NULL,

url varchar(100) DEFAULT '' NOT NULL,

PRIMARY KEY (id)

) ;

I’ve populated the wp_hfm_categories table with a thousand records and the wp_hfm_books table with 1 million book records. This means there are about 100,000 books per category.

Using some PHP, I’m going to benchmark how long it takes to query the data for all the books in the first category.

<?php

$time_start = microtime( true );

$connection = mysqli_connect( "localhost", "root", "password", "wordpress" );

$query = "SELECT * FROM wp_hfm_books WHERE category_id = 1";

$time_end = microtime( true );

$setup_time = round( $time_end - $time_start, 5 );

$time_start = microtime( true );

$result = mysqli_query( $connection, $query );

$time_end = microtime( true );

$query_time = round( $time_end - $time_start, 5 );

if ( ! $result ) {

echo "Query failed!" . '</br>';

die();

}

$time_start = microtime( true );

$book_list = '';

while ( $book = $result->fetch_object() ) {

$book_list .= '<p>' . $book->title . '</p>';

}

$time_end = microtime( true );

$execution_time = round( $time_end - $time_start, 5 );

echo "Setup in {$setup_time} seconds!" . '</br>';

echo "Query in {$query_time} seconds!" . '</br>';

echo "Books gathered in {$execution_time} seconds!" . '</br>';

echo $book_list;

The final execution results:

Setup in 0.00031 seconds!

Query in 0.53784 seconds!

Books gathered in 0.00497 seconds!

As you can see from the results, the database interaction accounts for almost all page load time, and has exceeded 0.5 seconds at the 1 million record mark.

What I’ve Seen in the Real World

Hypothetical examples are all well and good, but the benefit of indexes is something you’ll only really appreciate when you’ve experienced not having them on a production WordPress website or application. This happened to me when I worked at a podcast hosting service.

The feed is an important element of any successful podcast. This is the XML data submitted to platforms like Apple Podcasts, Spotify, and Amazon Music. Each platform queries the RSS feed at regular intervals, checking for new episodes in the feed, and updating the show on their platform.

Now, if you’re a podcast show owner, and you host your own feed, it’s probably not going to be a problem. However, things start to get interesting if you’re a podcast hosting service with thousands of shows, often with more than one feed per show.

As the business grew, we started having issues where the RSS feeds for larger shows weren’t updating correctly. The first fix was to implement Redis caching on the feeds. This worked for a while, but the problem soon came back as more shows and episodes were added. During a brainstorming session, one of the developers noticed that the tables being queried to build the feed were not correctly indexed. We spent about half an hour confirming the correct indexes and adding them. Immediately the query time dropped to almost a tenth of the original time, and the issue disappeared.

Let’s see how our books example fares in a more realistic environment. I’ve created a page template in a WordPress child theme that outputs some data for each book.

<?php

/**

* Template Name: Books Template

*

* @package WordPress

* @subpackage Twenty_TwentyTwo_Child

* @since 1.0.0

*/

$execution_time = 0;

$time_start = microtime(true);

get_header(); ?>

<header class="page-header alignwide">

<h1 class="page-title">Books</h1>

</header><!-- .page-header -->

<?php

$books = hfm_books_get_books();

foreach ( $books as $book ) {

?>

<article id="post-<?= $book['id'] ?>" <?php post_class(); ?>>

<header>

<h1><a href="<?= $book['url'] ?>"><?= $book['title'] ?></a></h1>

</header><!-- .entry-header -->

<div>

<?php echo $book['description']; ?>

</div><!-- .entry-content -->

</article>

<?php

}

get_footer();

The function that queries the books uses the same query as our earlier test:

function wp_books_get_books() {

global $wpdb;

$query = "SELECT * FROM wp_hfm_books where category_id = 1;";

return $wpdb->get_results($query, ARRAY_A);

}

The time to generate the page content on my local development environment is 2.79 seconds, the total query time is 0.52 seconds (because of all the other queries being run to render the page), and the amount of memory consumed is 20 megabytes.

Now you’re probably thinking to yourself, “Ok, but I can speed all this up by adding pagination, and limiting results per page.” You’re not wrong. However, let’s say you show 10 records per page. You might speed up the individual page request, but can that same request handle 1,000 concurrent users? What about 10,000? What about 1,000 users each requesting the books for each of the 1,000 categories? That’s effectively 1 million records!

Now think about storing and querying additional book data, maybe author information in an authors table, or if you need to query a files table for digital versions of the book. Eventually, as it did in my podcasting scenario, it all adds up.

How to Inspect Slow WordPress Database Queries

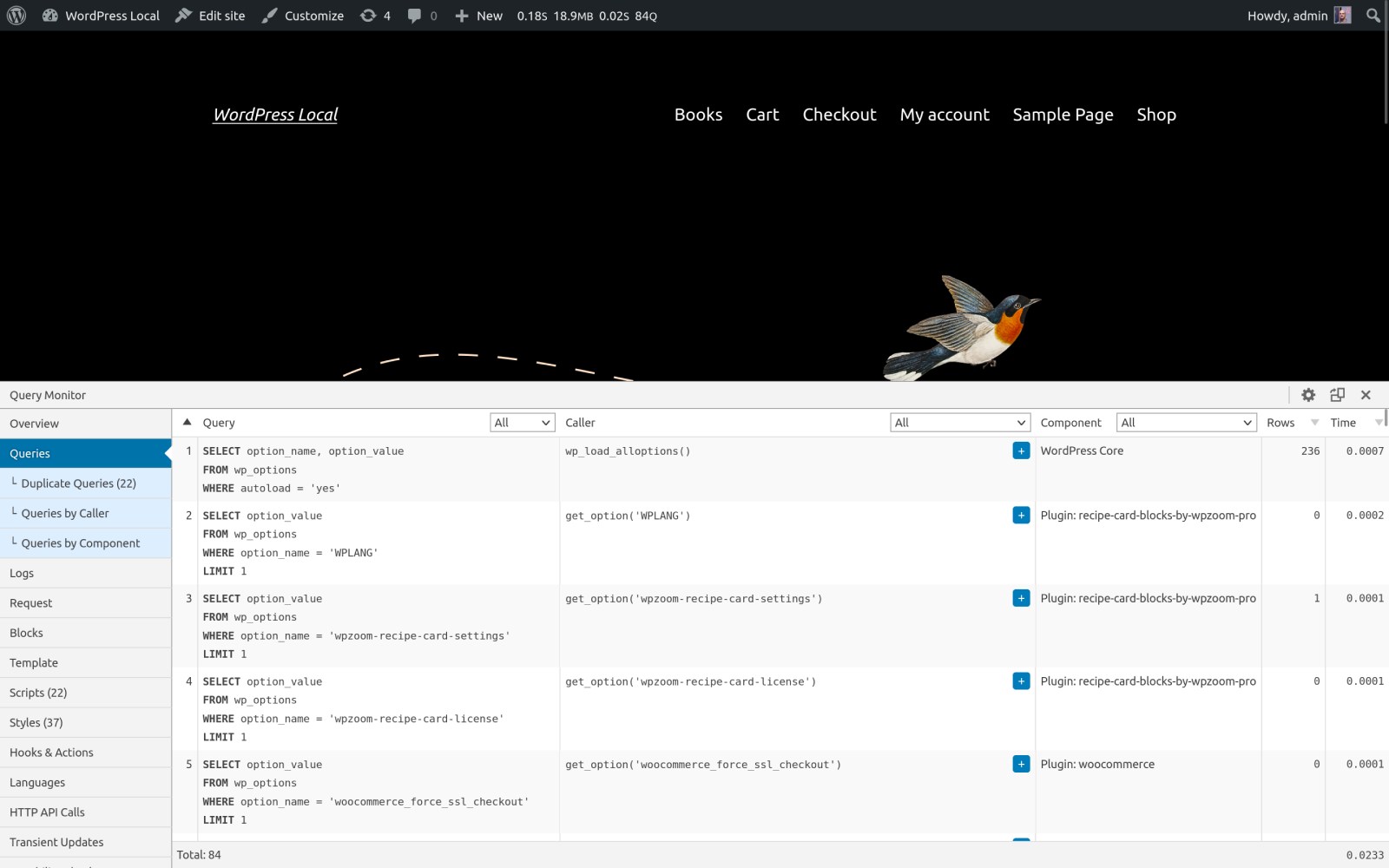

There are two ways to determine if database queries are at the root of the problem. If you’re working with a WordPress website, you can install the Query Monitor plugin by John Blackbourn. This plugin adds a host of developer tools to the WordPress dashboard, but its main feature is the ability to view all the database queries that make up a page request.

If for whatever reason you can’t install this plugin, or you’re not working with a WordPress site, you can enable the MySQL slow query log. This will write a log of any queries that take longer than the value of the long_query_time MySQL variable, which defaults to 10 seconds. You can enable this log by connecting to your MySQL terminal, and enabling the slow_query_log MySQL variable.

SET GLOBAL slow_query_log = 'ON';

You can also adjust the long_query_time global to a shorter window, as opposed to the default of 10 seconds:

SET GLOBAL long_query_time = 1; ## log any queries slower than 1 second

This log file can be reviewed manually or by using the mysqldumpslow command.

Once you know that the database queries are the problem, you might try some of the common database cleanup suggestions:

- Cleaning out unnecessary data from WordPress tables. Often, this is due to data left behind by uninstalled WordPress plugins such as plugin settings, options, and metadata left in the

wp_options,wp_posts,wp_postmetaandwp_commentmetatables, and custom tables not deleted when the plugin is removed. Unnecessary data can also include unused taxonomies. The default WordPress taxonomies are tags and categories. You can see both of these in the Posts menu of your WordPress admin. - Deleting data you don’t need anymore, like spam comments, post revisions, unused tags, transient options, etc.

- Installing and using database optimization plugins like WP-Optimize or Advanced Database Cleaner for automatic cleanups. Both also offer options for multisite support.

- Using the MySQL

OPTIMIZE TABLEcommand. - Changing table storage engines.

- Disabling things like pingbacks and trackbacks.

While these are worth considering for more generic WordPress data-related problems, there’s only one way to fix unindexed custom tables and that’s to add indexes.

Diagnosing a WordPress Database Index Problem

Let’s go back to the books data I showed you earlier, and imagine that you’ve discovered the books queries are slowing things down.

To begin diagnosing this issue, you’ll need the raw SQL query:

SELECT * FROM wp_hfm_books where category_id = 1;

Logging into the MySQL terminal and running this query takes 0.48 seconds on my machine. To see what’s taking so long, we can use the MySQL EXPLAIN statement which will help us understand what is happening behind the scenes. Run the query again but add explain to the beginning:

EXPLAIN SELECT * FROM wp_hfm_books where category_id = 1;

Running this query will give you a bunch of information, but the important fields to look for are the possible_keys and key fields. This tells you which indexes (keys) are available for this query and which index is actually used. It’s also important to note that the rows field tells us how many rows were scanned during this query. So when I run this for our query I get the following results:

+----+-------------+--------------+------------+------+---------------+------+---------+------+--------+----------+-------------+ | id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra | +----+-------------+--------------+------------+------+---------------+------+---------+------+--------+----------+-------------+ | 1 | SIMPLE | wp_hfm_books | NULL | ALL | NULL | NULL | NULL | NULL | 976402 | 10.00 | Using where | +----+-------------+--------------+------------+------+---------------+------+---------+------+--------+----------+-------------+ 1 row in set, 1 warning (0.00 sec)

Notice that, in this case, no indexes were being used and we had to scan through 976,402 rows! No wonder the query took so long.

Adding a WordPress Database Index

Now that the issue has been isolated as an index problem, let’s go ahead and add an index to the category_id column for the wp_hfm_books table. There are a few ways you can do this.

The first way is to add the index directly to the table. This can be achieved either by running an ALTER TABLE query in the MySQL terminal, or using a MySQL database management tool like TablePlus, or via phpMyAdmin. The ALTER TABLE query for the books table would look like this:

ALTER TABLE wp_hfm_books ADD INDEX category_id_index (category_id);

This adds an index called category_id_index on the category_id field.

If you’re working with WordPress custom tables then it’s a good idea to add the indexes to your installation function that creates the table. This is achieved by adding the INDEX after the PRIMARY KEY in the query:

CREATE TABLE wp_hfm_books (

id mediumint(9) NOT NULL AUTO_INCREMENT,

category_id mediumint(9) NOT NULL,

author_id mediumint(9) NOT NULL,

title tinytext NOT NULL,

description text NOT NULL,

url varchar(100) DEFAULT '' NOT NULL,

PRIMARY KEY (id),

INDEX category_id_index (category_id)

) ;

If this table is already used in one (or more) production environments, you’ll need to implement an upgrade step that runs the ALTER TABLE query. To manage this, we recommend implementing and using our migrations library, inspired by Laravel migrations.

Speaking of Laravel, if you need to do this in a Laravel app, you can do so by creating a new migration and adding an index to the table using the $table->index() method. Please note that it’s always best to take a full database backup before running any queries which alter tables.

Schema::table('books', function (Blueprint $table) {

$table->index('category_id', ‘category_id_index’);

});

Depending on how many records you’re indexing, this could take a little while, but the wait is worth it.

Reviewing the Improvements

So, what impact does the index have on the query? If you run it again, you should see a marked improvement. In my local environment, it dropped the time taken for the query down to 0.08 seconds. That’s five times faster than before! If you run the EXPLAIN statement again, you should see something like this:

+----+-------------+--------------+------------+------+-------------------+-------------------+---------+-------+-------+----------+-------+ | id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra | +----+-------------+--------------+------------+------+-------------------+-------------------+---------+-------+-------+----------+-------+ | 1 | SIMPLE | wp_hfm_books | NULL | ref | category_id_index | category_id_index | 3 | const | 10189 | 100.00 | NULL | +----+-------------+--------------+------------+------+-------------------+-------------------+---------+-------+-------+----------+-------+ 1 row in set, 1 warning (0.00 sec)

You can see that the query is now using the new category_id index that you just created and by using this new index the number of rows it has to scan has dropped from 976,402 to 10,189. Success!

It’s worth noting at this point that your mileage may vary when adding indexes to database tables. The performance gain will depend on not only the amount of data in the table, but also the type of query being run. As a side note, we could have just added category_id as a foreign key to the table. Foreign keys automatically index the column, plus they enforce data integrity. As foreign key constraints aren’t used by WordPress core you may want to avoid them just to keep things consistent.

Compound Indexes

As you might expect, there is a bit of an art to adding database indexes. For example, if you have multiple WHERE clauses in your query it might make sense to add a multi-column (compound) index which includes every field in your WHERE clause. Say you are also storing author data which you need to filter by, and your query is:

SELECT * FROM books WHERE category_id = 1 AND author_id = 1;

You could add a compound index like this:

ALTER TABLE wp_hfm_books ADD INDEX by_category_and_author_index (category_id, author_id);

If you’re filtering on multiple fields, running queries with a compound index improves the performance of the query by about 50% again. However, be aware:

- Adding more indexes will increase the size of your database and the RAM usage of your database (as MySQL tries to store indexes in memory).

- MySQL is smart enough to use indexes even though the index fields might not include every field in your

WHEREclause. If I added both indexes above, anEXPLAINwould show the singlecategory_idindex that would be used instead of the compound index, even though I had multipleWHEREclauses in my query. - MySQL can use multiple indexes if it needs to.

- The order of the fields in a compound index can also have an impact. Generally, they should be ordered from most common to least common.

So you might still see a performance gain without adding more compound indexes. Using EXPLAIN is your friend here. It will show you what MySQL does behind the scenes.

Wrapping Up

The books/categories example I’ve used here is very simplified but the speed increases from adding indexes on one table column illustrate the point. When you are filtering or joining data based on specific fields, adding indexes to those fields is a crucial step in ensuring your queries are optimized.

Have you experienced a situation where adding indexes resolved performance-related issues? Perhaps this is the first time you are learning about table indexes, and you’re keen to implement them? Let us know in the comments.